diff --git a/modules/GSOC/.deepsource.toml b/modules/GSOC/.deepsource.toml

new file mode 100644

index 0000000000000000000000000000000000000000..e6c1240dfe995df2a66f553485aa93d54e0f386d

--- /dev/null

+++ b/modules/GSOC/.deepsource.toml

@@ -0,0 +1,6 @@

+version = 1

+[[analyzers]]

+name = "python"

+enabled = true

+ [analyzers.meta]

+ runtime_version = "3.x.x"

diff --git a/modules/GSOC/E1_ShuffleNet/README.md b/modules/GSOC/E1_ShuffleNet/README.md

new file mode 100644

index 0000000000000000000000000000000000000000..af41fca00801bcd2a74402bb77beed62fb7fdd3c

--- /dev/null

+++ b/modules/GSOC/E1_ShuffleNet/README.md

@@ -0,0 +1,29 @@

+ShuffleNet ONNX to Tensorflow Hub Module Export

+-------------------------------------------------

+**Original Repsitory for ONNX Model**: https://github.com/onnx/models/tree/master/shufflenet

+

+**Description**: Shufflenet is a Deep Convolutional Network for Classification

+

+**Original Paper**: [ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices](https://arxiv.org/abs/1707.01083)

+

+## Module Properties

+1. Load as **Channel First**

+2. Input Shape: [1, 3, 224, 224]

+2. Output Shape: [1, 1000]

+3. Download Size: 5.3 MB

+

+## Steps

+- `pip install -r requirements.txt`

+- `python3 export.py`

+

+ The Tensorflow Hub Module is Exported as *onnx/shufflenet/1*

+

+## Load and Use Model

+

+ ```python3

+ import tensorflow_hub as hub

+ module = hub.Module("onnx/shufflenet/1")

+ result = module(...)

+ ```

+ ## Important

+ The module produced is **not** functional. Check issue, https://github.com/captain-pool/GSOC/issues/3

diff --git a/modules/GSOC/E1_ShuffleNet/export.py b/modules/GSOC/E1_ShuffleNet/export.py

new file mode 100644

index 0000000000000000000000000000000000000000..3363f02f1a7d859512cd2bd3d09a5b636e021b96

--- /dev/null

+++ b/modules/GSOC/E1_ShuffleNet/export.py

@@ -0,0 +1,80 @@

+# Copyright 2018 The TensorFlow Hub Authors. All Rights Reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+# ==============================================================================

+

+from __future__ import absolute_import

+from __future__ import division

+from __future__ import print_function

+

+import onnx

+from onnx_tf.backend import prepare

+import tensorflow as tf

+import tensorflow_hub as hub

+import os

+import io

+import requests

+from tqdm import tqdm

+import tarfile

+

+DOWNLOAD_LINK = "https://s3.amazonaws.com/download.onnx/models/opset_8/shufflenet.tar.gz"

+SHUFFLENET_PB = "shufflenet.pb"

+

+

+def load_shufflenet():

+ # Download Shufflenet if it doesn't exist

+ if not os.path.exists(DOWNLOAD_LINK.split("/")[-1]):

+ response = requests.get(DOWNLOAD_LINK, stream=True)

+ with open(DOWNLOAD_LINK.split("/")[-1], "wb") as handle:

+ for data in tqdm(

+ response.iter_content(

+ chunk_size=io.DEFAULT_BUFFER_SIZE),

+ total=int(

+ response.headers['Content-length']) //

+ io.DEFAULT_BUFFER_SIZE,

+ desc="Downloading"):

+ handle.write(data)

+ tar = tarfile.open(DOWNLOAD_LINK.split("/")[-1])

+ tar.extractall()

+ tar.close()

+ # Export Protobuf File if not present

+ if not os.path.exists(SHUFFLENET_PB):

+ model = onnx.load("shufflenet/model.onnx")

+ tf_rep = prepare(model)

+ tf_rep.export_graph(SHUFFLENET_PB)

+

+

+def module_fn():

+ input_name = "gpu_0/data_0:0"

+ output_name = "Softmax:0"

+ graph_def = tf.GraphDef()

+ with tf.gfile.GFile(SHUFFLENET_PB, 'rb') as f:

+ graph_def.ParseFromString(f.read())

+ input_tensor = tf.placeholder(tf.float32, shape=[1, 3, 224, 224])

+ output_tensor, = tf.import_graph_def(

+ graph_def, input_map={

+ input_name: input_tensor}, return_elements=[output_name])

+ hub.add_signature(inputs=input_tensor, outputs=output_tensor)

+

+

+def main():

+ load_shufflenet()

+ spec = hub.create_module_spec(module_fn)

+ module = hub.Module(spec)

+ with tf.Session() as sess:

+ module.export("onnx/shufflenet/1", sess)

+ print("Exported")

+

+

+if __name__ == "__main__":

+ main()

diff --git a/modules/GSOC/E1_TFHub_Sample_Deploy/BUILD b/modules/GSOC/E1_TFHub_Sample_Deploy/BUILD

new file mode 100644

index 0000000000000000000000000000000000000000..9c06a633d5651ac7cf0eb0e5ecbf9b1c6807c172

--- /dev/null

+++ b/modules/GSOC/E1_TFHub_Sample_Deploy/BUILD

@@ -0,0 +1,39 @@

+py_binary(

+ name = "export",

+ srcs = ["export.py"],

+ python_version = "PY3",

+ srcs_version = "PY2AND3",

+ deps = [":export_lib"],

+)

+

+py_library(

+ name = "export_lib",

+ srcs = ["export.py"],

+ srcs_version = "PY2AND3",

+ deps = [

+ ":expect_numpy_installed",

+ ":expect_tensorflow_installed",

+ "//external:tensorflow_hub",

+ ],

+)

+

+py_test(

+ name = "export_test",

+ srcs = ["export_test.py"],

+ python_version = "PY3",

+ srcs_version = "PY2AND3",

+ deps = [

+ ":expect_numpy_installed",

+ ":expect_tensorflow_installed",

+ ":export_lib",

+ "//external:tensorflow_hub",

+ ],

+)

+

+py_library(

+ name = "expect_tensorflow_installed",

+)

+

+py_library(

+ name = "expect_numpy_installed",

+)

diff --git a/modules/GSOC/E1_TFHub_Sample_Deploy/README.md b/modules/GSOC/E1_TFHub_Sample_Deploy/README.md

new file mode 100644

index 0000000000000000000000000000000000000000..c474bd65810957460f94f95cbaaece28a90520a6

--- /dev/null

+++ b/modules/GSOC/E1_TFHub_Sample_Deploy/README.md

@@ -0,0 +1,8 @@

+Sample MNIST Graph to export as Tensorflow Hub Module

+------------------------------------------------------

+### To Export

+```bash

+$ python3 export.py

+```

+### to run The Tests

+- `$ bazel test E1_TFHub_Sample_Deploy:export_test`

diff --git a/modules/GSOC/E1_TFHub_Sample_Deploy/export.py b/modules/GSOC/E1_TFHub_Sample_Deploy/export.py

new file mode 100644

index 0000000000000000000000000000000000000000..7cf1a36183adbf5156a4365af29c5056721da4c8

--- /dev/null

+++ b/modules/GSOC/E1_TFHub_Sample_Deploy/export.py

@@ -0,0 +1,144 @@

+import sys

+import tensorflow as tf

+import tensorflow_datasets as tfds

+import tensorflow_hub as hub

+import argparse

+

+"""Exporter for TF-Hub Modules in SavedModel v2.0 format.

+

+The module has as a single signature, accepting a batch of images with shape [None, 28, 28, 1] and returning a prediction vector.

+In this example, we are loading the MNIST Dataset from TFDS and training a simple digit classifier.

+"""

+

+FLAGS = None

+

+

+class MNIST(tf.keras.models.Model):

+ """Model representing a MNIST classifier"""

+

+ def __init__(self, output_activation="softmax"):

+ """

+ Args:

+ output_activation (str): activation for last layer.

+ """

+ super(MNIST, self).__init__()

+ self.layer_1 = tf.keras.layers.Dense(64)

+ self.layer_2 = tf.keras.layers.Dense(10, activation=output_activation)

+

+ @tf.function(

+ input_signature=[

+ tf.TensorSpec(

+ shape=[None, 28, 28, 1],

+ dtype=tf.uint8)])

+ def call(self, inputs):

+ casted = tf.keras.layers.Lambda(

+ lambda x: tf.cast(x, tf.float32))(inputs)

+ flatten = tf.keras.layers.Flatten()(casted)

+ normalize = tf.keras.layers.Lambda(

+ lambda x: x / tf.reduce_max(tf.gather(x, 0)))(flatten)

+ x = self.layer_1(normalize)

+ output = self.layer_2(x)

+ return output

+

+

+def train_step(model, loss_fn, optimizer_fn, metric, image, label):

+ """ Perform one training step for the model.

+ Args:

+ model : Keras model to train.

+ loss_fn : Loss function to use.

+ optimizer_fn: Optimizer function to use.

+ metric : keras.metric to use.

+ image : Tensor of training images of shape [batch_size, 28, 28, 1].

+ label : Tensor of class labels of shape [batch_size, ]

+ """

+ with tf.GradientTape() as tape:

+ preds = model(image)

+ label_onehot = tf.one_hot(label, 10)

+ loss_ = loss_fn(label_onehot, preds)

+ grads = tape.gradient(loss_, model.trainable_variables)

+ optimizer_fn.apply_gradients(zip(grads, model.trainable_variables))

+ metric(loss_)

+

+

+def train_and_export(

+ data_dir=None,

+ buffer_size=1000,

+ batch_size=32,

+ learning_rate=1e-3,

+ epoch=10,

+ dataset=None,

+ export_path="/tmp/tfhub_modules/mnist/digits/1"):

+ """

+ Trains and export the model as SavedModel 2.0.

+ Args:

+ data_dir (str) : Directory where to store datasets from TFDS.

+ (With proper authentication, cloud bucket supported).

+ buffer_size (int) : Size of buffer to use while shuffling.

+ batch_size (int) : Size of each training batch.

+ learning_rate (float) : Learning rate to use for the optimizer.

+ epoch (int) : Number of Epochs to train for.

+ dataset (tf.data.Dataset): Dataset object if data_dir is not provided.

+ export_path (str) : Path to export the trained model.

+ """

+ model = MNIST()

+ if not dataset:

+ train = tfds.load(

+ "mnist",

+ split="train",

+ data_dir=data_dir,

+ batch_size=batch_size).shuffle(

+ buffer_size,

+ reshuffle_each_iteration=True)

+ else:

+ train = dataset

+ optimizer_fn = tf.optimizers.Adam(learning_rate=learning_rate)

+ loss_fn = tf.keras.losses.CategoricalCrossentropy(from_logits=True)

+ metric = tf.keras.metrics.Mean()

+ model.compile(optimizer_fn, loss=loss_fn)

+ # Training Loop

+ for epoch in range(epoch):

+ for step, data in enumerate(train):

+ train_step(

+ model,

+ loss_fn,

+ optimizer_fn,

+ metric,

+ data['image'],

+ data['label'])

+ sys.stdout.write("\rEpoch: #{}\tStep: #{}\tLoss: {}".format(

+ epoch, step, metric.result().numpy()))

+ # Exporting Model as SavedModel 2.0

+ tf.saved_model.save(model, export_path)

+

+

+if __name__ == "__main__":

+ parser = argparse.ArgumentParser()

+ parser.add_argument(

+ "--export_path",

+ type=str,

+ default="/tmp/tfhub_modules/mnist/digits/1",

+ help="Path to export the module")

+ parser.add_argument("--data_dir", type=str, default=None,

+ help="Path to dustom TFDS data directory")

+ parser.add_argument(

+ "--buffer_size",

+ type=int,

+ default=1000,

+ help="Buffer Size to use while shuffling the dataset")

+ parser.add_argument(

+ "--batch_size",

+ type=int,

+ default=32,

+ help="Size of each batch")

+ parser.add_argument(

+ "--learning_rate",

+ type=float,

+ default=1e-3,

+ help="learning rate")

+ parser.add_argument(

+ "--epoch",

+ type=int,

+ default=10,

+ help="Number of iterations")

+ FLAGS, unparsed = parser.parse_known_args()

+ train_and_export(**vars(FLAGS))

diff --git a/modules/GSOC/E1_TFHub_Sample_Deploy/export_test.py b/modules/GSOC/E1_TFHub_Sample_Deploy/export_test.py

new file mode 100644

index 0000000000000000000000000000000000000000..2e5e33dbd07c31f59c2d2b041fd246b405ca9b1a

--- /dev/null

+++ b/modules/GSOC/E1_TFHub_Sample_Deploy/export_test.py

@@ -0,0 +1,39 @@

+import tensorflow as tf

+import tensorflow_hub as hub

+import unittest

+import tempfile

+import os

+import re

+import export

+

+

+class TFHubMNISTTest(tf.test.TestCase):

+ def setUp(self):

+ self.mock_dataset = tf.data.Dataset.range(5).map(

+ lambda x: {

+ "image": tf.cast(

+ 255 * tf.random.normal([1, 28, 28, 1]), tf.uint8),

+ "label": x})

+

+ def test_model_exporting(self):

+ export.train_and_export(

+ epoch=1,

+ dataset=self.mock_dataset,

+ export_path="%s/model/1" %

+ self.get_temp_dir())

+ self.assertTrue(os.listdir(self.get_temp_dir()))

+

+ def test_empty_input(self):

+ export.train_and_export(

+ epoch=1,

+ dataset=self.mock_dataset,

+ export_path="%s/model/1" %

+ self.get_temp_dir())

+ model = hub.load("%s/model/1" % self.get_temp_dir())

+ output_ = model.call(

+ tf.zeros([1, 28, 28, 1], dtype=tf.uint8).numpy())

+ self.assertEqual(output_.shape, [1, 10])

+

+

+if __name__ == '__main__':

+ tf.test.main()

diff --git a/modules/GSOC/E1_TPU_Sample/README.md b/modules/GSOC/E1_TPU_Sample/README.md

new file mode 100644

index 0000000000000000000000000000000000000000..3663ea684db120ed98879198c3ea8d4b4740bef1

--- /dev/null

+++ b/modules/GSOC/E1_TPU_Sample/README.md

@@ -0,0 +1,74 @@

+# Image Retraining Sample

+

+**Topics:** Tensorflow 2.0, TF Hub, Cloud TPU

+

+## Specs

+### Cloud TPU

+

+**TPU Type:** v2.8

+**Tensorflow Version:** 1.14

+

+### Cloud VM

+

+**Machine Type:** n1-standard-2

+**OS**: Debian 9

+**Tensorflow Version**: Came with tf-nightly. Manually installed Tensorflow 2.0 Beta

+

+Launching Instance and VM

+---------------------------

+- Open Google Cloud Shell

+- `ctpu up -tf-version 1.14`

+- If cloud bucket is not setup automatically, create a cloud storage bucket

+with the same name as TPU and the VM

+- enable HTTP traffic for the VM instance

+- SSH into the system

+ - `pip3 uninstall -y tf-nightly`

+ - `pip3 install -r requirements.txt`

+ - `export CTPU_NAME=<common name of the tpu, vm and bucket>`

+

+

+Running Tensorboard:

+----------------------

+### Pre Requisites

+```bash

+$ sudo -i

+$ pip3 uninstall -y tf-nightly

+$ pip3 install tensorflow==2.0.0-beta0

+$ exit

+```

+

+### Launch

+```bash

+$ sudo tensorboard --logdir gs://$CTPU_NAME/model_dir --port 80 &>/dev/null &

+```

+To view Tensorboard, Browse to the Public IP of the VM Instance

+

+Running the Code:

+----------------------

+#### Train The Model

+

+```bash

+$ python3 image_retraining_tpu.py --tpu $CTPU_NAME --use_tpu \

+--modeldir gs://$CTPU_NAME/modeldir \

+--datadir gs://$CTPU_NAME/datadir \

+--logdir gs://$CTPU_NAME/logdir \

+--num_steps 2000 \

+--dataset horses_or_humans

+```

+Training Saves one single checkpoint at the end of training. This checkpoint can be loaded up

+later to export a SavedModel from it.

+

+#### Export Model

+

+```bash

+$ python3 image_retraining_tpu.py --tpu $CTPU_NAME --use_tpu \

+--modeldir gs://$CTPU_NAME/modeldir \

+--datadir gs://$CTPU_NAME/datadir \

+--logdir gs://$CTPU_NAME/logdir \

+--dataset horses_or_humans \

+--export_only \

+--export_path modeldir/model

+```

+Exporting SavedModel of trained model

+----------------------------

+The trained model gets saved at `gs://$CTPU_NAME/modeldir/model` by default if the path is not explicitly stated using `--export_path`

diff --git a/modules/GSOC/E1_TPU_Sample/image_retraining_tpu_strategy.py b/modules/GSOC/E1_TPU_Sample/image_retraining_tpu_strategy.py

new file mode 100644

index 0000000000000000000000000000000000000000..c820b1e67db4918759c13bd0a9b9cc881f60827a

--- /dev/null

+++ b/modules/GSOC/E1_TPU_Sample/image_retraining_tpu_strategy.py

@@ -0,0 +1,268 @@

+# Copyright 2019 The TensorFlow Hub Authors. All Rights Reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+# ==============================================================================

+""" TensorFlow Sample for running TPU Training """

+from __future__ import absolute_import

+from __future__ import division

+from __future__ import print_function

+

+import os

+

+import argparse

+from absl import logging

+import tensorflow as tf

+import tensorflow_hub as hub

+import tensorflow_datasets as tfds

+

+tf.compat.v2.enable_v2_behavior()

+os.environ["TFHUB_DOWNLOAD_PROGRESS"] = "True"

+

+PRETRAINED_KERAS_LAYER = "https://tfhub.dev/google/tf2-preview/inception_v3/feature_vector/4"

+BATCH_SIZE = 32 # In case of TPU, Must be a multiple of 8

+

+

+class SingleDeviceStrategy(object):

+ """ Dummy Class to mimic tf.distribute.Strategy for Single Devices """

+

+ def __enter__(self, *args, **kwargs):

+ pass

+

+ def __exit__(self, *args, **kwargs):

+ pass

+

+ def scope(self):

+ return self

+

+ def experimental_distribute_dataset(self, dataset):

+ return dataset

+

+ def experimental_run_v2(self, func, args, kwargs):

+ return func(*args, **kwargs)

+

+ def reduce(self, reduction_type, distributed_data, axis): # pylint: disable=unused-argument

+ return distributed_data

+

+

+class Model(tf.keras.models.Model):

+ """ Keras Model class for Image Retraining """

+

+ def __init__(self, num_classes):

+ super(Model, self).__init__()

+ logging.info("Loading Pretrained Image Vectorizer")

+ self._pretrained_layer = hub.KerasLayer(

+ PRETRAINED_KERAS_LAYER,

+ output_shape=[2048],

+ trainable=False)

+ self._dense_1 = tf.keras.layers.Dense(num_classes, activation="sigmoid")

+

+ @tf.function(

+ input_signature=[

+ tf.TensorSpec(

+ shape=[None, None, None, 3],

+ dtype=tf.float32)])

+ def call(self, inputs):

+ return self.unsigned_call(inputs)

+

+ def unsigned_call(self, inputs):

+ intermediate = self._pretrained_layer(inputs)

+ return self._dense_1(intermediate)

+

+

+def connect_to_tpu(tpu=None):

+ if tpu:

+ cluster_resolver = tf.distribute.cluster_resolver.TPUClusterResolver(tpu)

+ tf.config.experimental_connect_to_host(cluster_resolver.get_master())

+ tf.tpu.experimental.initialize_tpu_system(cluster_resolver)

+ strategy = tf.distribute.experimental.TPUStrategy(cluster_resolver)

+ return strategy, "/task:1" if os.environ.get("COLAB_TPU_ADDR") else "/job:worker"

+ return SingleDeviceStrategy(), ""

+

+

+def load_dataset(name, datadir, batch_size=32, shuffle=None):

+ """

+ Loads and preprocesses dataset from TensorFlow dataset.

+ Args:

+ name: Name of the dataset to load

+ datadir: Directory to the dataset in.

+ batch_size: size of each minibatch. Must be a multiple of 8.

+ shuffle: size of shuffle buffer to use. Not shuffled if set to None.

+ """

+ dataset, info = tfds.load(

+ name,

+ try_gcs=True,

+ data_dir=datadir,

+ split="train",

+ as_supervised=True,

+ with_info=True)

+ num_classes = info.features["label"].num_classes

+

+ def _scale_fn(image, label):

+ image = tf.cast(image, tf.float32)

+ image = image / 127.5

+ image -= 1.

+ label = tf.one_hot(label, num_classes)

+ label = tf.cast(label, tf.float32)

+ return image, label

+

+ options = tf.data.Options()

+ if not hasattr(tf.data.Options, "auto_shard"):

+ options.experimental_distribute.auto_shard = False

+ else:

+ options.auto_shard = False

+

+ dataset = (

+ dataset.map(

+ _scale_fn,

+ num_parallel_calls=tf.data.experimental.AUTOTUNE)

+ .with_options(options)

+ .batch(batch_size, drop_remainder=True))

+ if shuffle:

+ dataset = dataset.shuffle(shuffle, reshuffle_each_iteration=True)

+ return dataset.repeat(), num_classes

+

+

+def train_and_export(**kwargs):

+ """

+ Trains the model and exports as SavedModel.

+ Args:

+ tpu: Name or GRPC address of the TPU to use.

+ logdir: Path to a bucket or directory to store TensorBoard logs.

+ modeldir: Path to a bucket or directory to store the model.

+ datadir: Path to store the downloaded datasets to.

+ dataset: Name of the dataset to load from TensorFlow Datasets.

+ num_steps: Number of steps to train the model for.

+ """

+ if kwargs["tpu"]:

+ # For TPU Training the Files must be stored in

+ # Cloud Buckets for the TPU to access

+ if not kwargs["logdir"].startswith("gs://"):

+ raise ValueError("To train on TPU. `logdir` must be cloud bucket")

+ if not kwargs["modeldir"].startswith("gs://"):

+ raise ValueError("To train on TPU. `modeldir` must be cloud bucket")

+ if kwargs["datadir"]:

+ if not kwargs["datadir"].startswith("gs://"):

+ raise ValueError("To train on TPU. `datadir` must be a cloud bucket")

+

+ os.environ["TFHUB_CACHE_DIR"] = os.path.join(

+ kwargs["modeldir"], "tfhub_cache")

+

+ strategy, device = connect_to_tpu((not kwargs["export_only"]) and kwargs["tpu"])

+ with tf.device(device), strategy.scope():

+ summary_writer = tf.summary.create_file_writer(kwargs["logdir"])

+ dataset, num_classes = load_dataset(

+ kwargs["dataset"],

+ kwargs["datadir"],

+ shuffle=3 * 32,

+ batch_size=BATCH_SIZE)

+ dataset = iter(strategy.experimental_distribute_dataset(dataset))

+ model = Model(num_classes)

+ loss_metric = tf.keras.metrics.Mean()

+ optimizer = tf.keras.optimizers.Adam()

+ ckpt = tf.train.Checkpoint(model=model)

+ def distributed_step(images, labels):

+ with tf.GradientTape() as tape:

+ logging.info("Taking predictions")

+ predictions = model.unsigned_call(images)

+ logging.info("Calculating loss")

+ loss = tf.nn.sigmoid_cross_entropy_with_logits(labels, predictions)

+ loss_metric(loss)

+ loss = loss * (1.0 / BATCH_SIZE)

+ logging.info("Calculating gradients")

+ gradient = tape.gradient(loss, model.trainable_variables)

+ logging.info("Applying gradients")

+ train_op = optimizer.apply_gradients(zip(gradient, model.trainable_variables))

+ with tf.control_dependencies([train_op]):

+ return tf.cast(optimizer.iterations, tf.float32)

+

+ @tf.function

+ def train_step(image, label):

+ distributed_metric = strategy.experimental_run_v2(

+ distributed_step, args=[image, label])

+ step = strategy.reduce(

+ tf.distribute.ReduceOp.MEAN, distributed_metric, axis=None)

+ return step

+ if not kwargs["export_only"]:

+ logging.info("Starting Training")

+ while not kwargs["export_only"]:

+ image, label = next(dataset)

+ step = tf.cast(train_step(image, label), tf.uint8)

+ with summary_writer.as_default():

+ tf.summary.scalar("loss", loss_metric.result(), step=optimizer.iterations)

+ if step % 100:

+ logging.info("Step: #%f\tLoss: %f" % (step, loss_metric.result()))

+ if step >= kwargs["num_steps"]:

+ ckpt.save(file_prefix=os.path.join(kwargs["modeldir"], "checkpoint"))

+ break

+ logging.info("Exporting Saved Model")

+ export_path = (kwargs["export_path"]

+ or os.path.join(kwargs["modeldir"], "model"))

+ ckpt.restore(

+ tf.train.latest_checkpoint(

+ os.path.join(kwargs["modeldir"], "checkpoint")))

+ logging.info("Consuming checkpoint and tracing function")

+ model(tf.random.normal([1, 200, 200, 3]))

+ tf.saved_model.save(model, export_path)

+

+

+if __name__ == "__main__":

+ parser = argparse.ArgumentParser()

+ parser.add_argument(

+ "--dataset",

+ default=None,

+ help="Name of the Dataset to use")

+ parser.add_argument(

+ "--datadir",

+ default=None,

+ help="Directory to store the downloaded Dataset")

+ parser.add_argument(

+ "--modeldir",

+ default=None,

+ help="Directory to store the SavedModel to")

+ parser.add_argument(

+ "--logdir",

+ default=None,

+ help="Directory to store the Tensorboard logs")

+ parser.add_argument(

+ "--tpu",

+ default=None,

+ help="name or GRPC address of the TPU")

+ parser.add_argument(

+ "--num_steps",

+ default=1000,

+ type=int,

+ help="Number of Steps to train the model for")

+ parser.add_argument(

+ "--export_path",

+ default=None,

+ help="Explicitly specify the export path of the model."

+ "Else `modeldir/model` wil be used.")

+ parser.add_argument(

+ "--export_only",

+ default=False,

+ action="store_true",

+ help="Only export the SavedModel from presaved checkpoints")

+ parser.add_argument(

+ "--verbose",

+ "-v",

+ default=0,

+ action="count",

+ help="increase verbosity. multiple tags to increase more")

+ flags, unknown = parser.parse_known_args()

+ log_levels = [logging.FATAL, logging.WARNING, logging.INFO, logging.DEBUG]

+ log_level = log_levels[min(flags.verbose, len(log_levels) - 1)]

+ if not flags.modeldir:

+ logging.fatal("`--modeldir` must be specified")

+ sys.exit(1)

+ logging.set_verbosity(log_level)

+ train_and_export(**vars(flags))

diff --git a/modules/GSOC/E2_ESRGAN/README.md b/modules/GSOC/E2_ESRGAN/README.md

new file mode 100644

index 0000000000000000000000000000000000000000..3bc459acf267b6e32a6004f3bfb526adec58a32e

--- /dev/null

+++ b/modules/GSOC/E2_ESRGAN/README.md

@@ -0,0 +1,50 @@

+# Enhanced Super Resolution GAN

+Tensorflow 2.0 Implementation of Enhanced Super Resolution Generative Adversarial Network (Xintao et. al.)

+[https://arxiv.org/pdf/1809.00219.pdf](https://arxiv.org/pdf/1809.00219.pdf)

+

+Enhanced Super Resolution GAN implemented as a part of Google Summer of Code 2019. [https://summerofcode.withgoogle.com/projects/#4662790671826944](https://summerofcode.withgoogle.com/projects/#4662790671826944)

+The SavedModel is expected to be shipped as a Tensorflow Hub Module. [https://tfhub.dev/](https://tfhub.dev/)

+

+## Overview

+Enhanced Super Resolution GAN is an improved version of Super Resolution GAN (Ledig et.al.) [https://arxiv.org/abs/1609.04802](https://arxiv.org/abs/1609.04802).

+The Model uses Residual-in-Residual Block, as the basic convolutional block instead of the basic Residual Network or simple Convolution trunk to provide a better flow of gradients at the microscopic level.

+In addition to that the model lacks Batch Normalization layers, in the Generator to prevent smoothing out of the artifacts in the image. This allows

+ESRGAN to produce images having better approximation of the sharp edges of the image artifacts.

+ESRGAN uses a Relativistic Discriminator [https://arxiv.org/pdf/1807.00734.pdf](https://arxiv.org/pdf/1807.00734.pdf) to better approximate the probability of an

+image being real or fake thus producing better result.

+The generator uses a linear combination of Perceptual difference between real and fake image (using pretrained VGG19 Network), Pixelwise absolute difference between real and fake image

+and Relativistic Average Loss between the real and fake image as loss function during adversarial training.

+The generator is trained in a two phase training setup.

+- First Phase focuses on reducing the Pixelwise L1 Distance of the input and target high resolution image to prevent local minimas

+obtained while starting from complete randomness.

+- Second Phase focuses on creating sharper and better reconstruction of minute artifacts.

+

+The final trained model is then interpolated between the L1 loss model and adversarially trained model, to produce photo realistic

+reconstruction.

+## Example Usage

+```python3

+import tensorflow_hub as hub

+import tensorflow as tf

+model = hub.load("https://github.com/captain-pool/GSOC/releases/download/1.0.0/esrgan.tar.gz")

+super_resolution = model(LOW_RESOLUTION_IMAGE_OF_SHAPE=[BATCH, HEIGHT, WIDTH, 3])

+# Output Shape: [BATCH, 4 x HEIGHT, 4 x WIDTH, 3]

+# Output DType: tf.float32.

+# NOTE:

+# The values are needed to be clipped between [0, 255]

+# using tf.clip_by_value(...) and casted to tf.uint8 using tf.cast(...)

+# before plotting or saving as image

+```

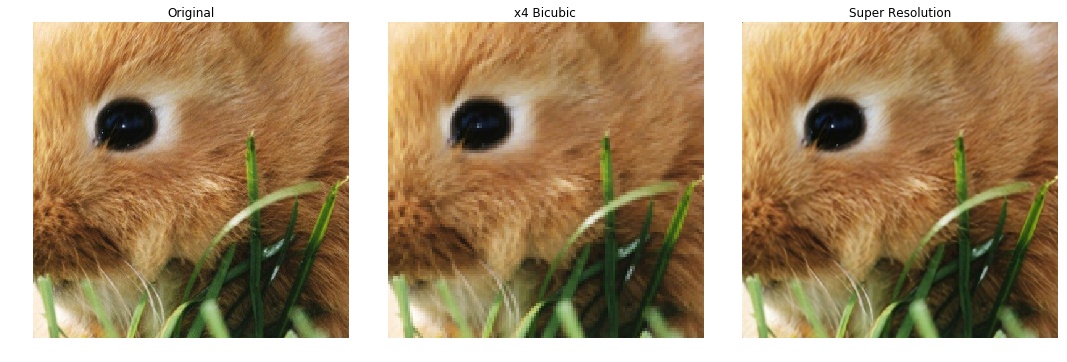

+## Results

+

+The model trained on DIV2K dataset on reconstructing 128 x 128 image by a scaling factor 4, yielded the following images.

+

+

+

+**The Model gives 32.6 PSNR on 512 x 512 image patches.**

+## Evaluate

+```bash

+python3 evaluate_psnr --lr_files "/path/to/images/*.png" --hr_files "/path/to/images/*.png"

+```

+For options, `python3 evaluate_psnr.py -h`

+## SavedModel 2.0

+Loadable SavedModel can be found at https://github.com/captain-pool/GSOC/releases/tag/1.0.0

diff --git a/modules/GSOC/E2_ESRGAN/config/config.yaml b/modules/GSOC/E2_ESRGAN/config/config.yaml

new file mode 100644

index 0000000000000000000000000000000000000000..ab4afa5fda4c29bf064dd9425db58c5ed40206c8

--- /dev/null

+++ b/modules/GSOC/E2_ESRGAN/config/config.yaml

@@ -0,0 +1,46 @@

+checkpoint_path:

+ phase_1: "checkpoints/phase_1"

+ phase_2: "checkpoints/phase_2"

+

+dataset:

+ name: "div2k"

+ scale_method: "bicubic"

+ hr_dimension: 512

+

+print_step: 1000

+

+# The following configuration is taken from ESRGAN Paper

+# Paper Available at: https://arxiv.org/abs/1809.00219

+interpolation_parameter: 0.8 # Interpolation parameter: 0 <= value <= 1

+RDB:

+ residual_scale_beta: 0.2

+#Training

+# warmup_num_iter: 1000 # Number of steps to train the PSNR model

+batch_size: 32

+train_psnr:

+ num_steps: 600000

+ adam:

+ initial_lr: 0.0002 # Theory 0.0002

+ decay:

+ factor: 0.5

+ step: 200000 # Theory: 2 x 10^5 Steps

+ beta_1: 0.9

+ beta_2: 0.999

+train_combined:

+ perceptual_loss_type: "L1" # Can be either L1 or L2

+ num_steps: 400000

+ lambda: !!float 5e-3

+ eta: !!float 1e-2

+ adam:

+ initial_lr: !!float 5e-5

+ beta_1: 0.9

+ beta_2: 0.999

+ decay:

+ factor: 0.5

+ step:

+ - 9000

+ - 30000

+ - 50000

+ - 100000

+ - 200000

+ - 300000

diff --git a/modules/GSOC/E2_ESRGAN/config/stats.yaml b/modules/GSOC/E2_ESRGAN/config/stats.yaml

new file mode 100644

index 0000000000000000000000000000000000000000..81ef8d3e4e6f2ebbad21b3d1d0212e0a35c2811e

--- /dev/null

+++ b/modules/GSOC/E2_ESRGAN/config/stats.yaml

@@ -0,0 +1,2 @@

+train_step_1: true

+train_step_2: true

diff --git a/modules/GSOC/E2_ESRGAN/evaluate_psnr.py b/modules/GSOC/E2_ESRGAN/evaluate_psnr.py

new file mode 100644

index 0000000000000000000000000000000000000000..cfcbdd85ba7d5784d8b2854da87118981c238862

--- /dev/null

+++ b/modules/GSOC/E2_ESRGAN/evaluate_psnr.py

@@ -0,0 +1,125 @@

+""" Evaluates the SavedModel of ESRGAN """

+import os

+import itertools

+import functools

+import argparse

+from absl import logging

+import tensorflow.compat.v2 as tf

+import tensorflow_hub as hub

+tf.enable_v2_behavior()

+

+def build_dataset(

+ lr_glob,

+ hr_glob,

+ lr_crop_size=[128, 128],

+ scale=4):

+ """

+ Builds a tf.data.Dataset from directory path.

+ Args:

+ lr_glob: Pattern to match Low Resolution images.

+ hr_glob: Pattern to match High resolution images.

+ lr_crop_size: Size of Low Resolution images to work on.

+ scale: Scaling factor of the images to work on.

+ """

+

+ def _read_images_fn(low_res_images, high_res_images):

+ for lr_image_path, hr_image_path in zip(low_res_images, high_res_images):

+ lr_image = tf.image.decode_image(tf.io.read_file(lr_image_path))

+ hr_image = tf.image.decode_image(tf.io.read_file(hr_image_path))

+ for height in range(0, lr_image.shape[0] - lr_crop_size[0] + 1, 40):

+ for width in range(0, lr_image.shape[1] - lr_crop_size[1] + 1, 40):

+ lr_sub_image = tf.image.crop_to_bounding_box(

+ lr_image,

+ height, width,

+ lr_crop_size[0], lr_crop_size[1])

+ hr_sub_image = tf.image.crop_to_bounding_box(

+ hr_image,

+ height * scale, width * scale,

+ lr_crop_size[0] * scale, lr_crop_size[1] * scale)

+ yield (tf.cast(lr_sub_image, tf.float32),

+ tf.cast(hr_sub_image, tf.float32))

+

+ hr_images = tf.io.gfile.glob(hr_glob)

+ lr_images = tf.io.gfile.glob(lr_glob)

+ hr_images = sorted(hr_images)

+ lr_images = sorted(lr_images)

+ dataset = tf.data.Dataset.from_generator(

+ functools.partial(_read_images_fn, lr_images, hr_images),

+ (tf.float32, tf.float32),

+ (tf.TensorShape([None, None, 3]), tf.TensorShape([None, None, 3])))

+ return dataset

+

+

+def main(**kwargs):

+ total = kwargs["total"]

+ dataset = build_dataset(kwargs["lr_files"], kwargs["hr_files"])

+ dataset = dataset.batch(kwargs["batch_size"])

+ count = itertools.count(start=1, step=kwargs["batch_size"])

+ os.environ["TFHUB_DOWNLOAD_PROGRESS"] = "True"

+ metrics = tf.keras.metrics.Mean()

+ for lr_image, hr_image in dataset:

+ # Loading the model multiple time is the only

+ # way to preserve the quality, since the quality

+ # degrades at every inference for no obvious reaons

+ model = hub.load(kwargs["model"])

+ super_res_image = model.call(lr_image)

+ super_res_image = tf.clip_by_value(super_res_image, 0, 255)

+ metrics(

+ tf.reduce_mean(

+ tf.image.psnr(

+ super_res_image,

+ hr_image,

+ max_val=256)))

+ c = next(count)

+ if c >= total:

+ break

+ if not (c // 100) % 10:

+ print(

+ "%d Images Processed. Mean PSNR yet: %f" %

+ (c, metrics.result().numpy()))

+ print(

+ "%d Images processed. Mean PSNR: %f" %

+ (total, metrics.result().numpy()))

+ with tf.io.gfile.GFile("PSNR_result.txt", "w") as f:

+ f.write("%d Images processed. Mean PSNR: %f" %

+ (c, metrics.result().numpy()))

+

+

+if __name__ == "__main__":

+ parser = argparse.ArgumentParser()

+ parser.add_argument(

+ "--total",

+ default=10000,

+ help="Total number of sub images to work on. (default: 1000)")

+ parser.add_argument(

+ "--batch_size",

+ default=16,

+ type=int,

+ help="Number of images per batch (default: 16)")

+ parser.add_argument(

+ "--lr_files",

+ default=None,

+ help="Pattern to match low resolution files")

+ parser.add_argument(

+ "--hr_files",

+ default=None,

+ help="Pattern to match High resolution images")

+ parser.add_argument(

+ "--model",

+ default="https://github.com/captain-pool/GSOC/"

+ "releases/download/1.0.0/esrgan.tar.gz",

+ help="URL or Path to the SavedModel")

+ parser.add_argument(

+ "--verbose", "-v",

+ default=0,

+ action="count",

+ help="Increase Verbosity of logging")

+

+ flags, unknown = parser.parse_known_args()

+ if not (flags.lr_files and flags.hr_files):

+ logging.error("Must set flag --lr_files and --hr_files")

+ sys.exit(1)

+ log_levels = [logging.FATAL, logging.WARN, logging.INFO, logging.DEBUG]

+ log_level = log_levels[min(flags.verbose, len(log_levels) - 1)]

+ logging.set_verbosity(log_level)

+ main(**vars(flags))

diff --git a/modules/GSOC/E2_ESRGAN/lib/dataset.py b/modules/GSOC/E2_ESRGAN/lib/dataset.py

new file mode 100644

index 0000000000000000000000000000000000000000..b7485b7f1347df6dd118e93e3daea2f5e7afb219

--- /dev/null

+++ b/modules/GSOC/E2_ESRGAN/lib/dataset.py

@@ -0,0 +1,185 @@

+import os

+import numpy as np

+from absl import logging

+from functools import partial

+import tensorflow as tf

+# import tensorflow_datasets as tfds

+from util.util import scale, make_tf_callable_generator

+from util.setup import ancillary_path

+import pickle

+

+""" Dataset Handlers for ESRGAN """

+

+

+def scale_down(method="bicubic", dimension=512, size=None, factor=4):

+ """ Scales down function based on the parameters provided.

+ Args:

+ method (default: bicubic): Interpolation method to be used for Scaling down the image.

+ dimension (default: 256): Dimension of the high resolution counterpart.

+ size (default: None): [height, width] of the image.

+ factor (default: 4): Factor by which the model enhances the low resolution image.

+ Returns:

+ tf.data.Dataset mappable python function based on the configuration.

+ """

+ if not size:

+ size = (dimension, dimension)

+ size_ = {"size": size}

+

+ def scale_fn(image, *args, **kwargs):

+ size = size_["size"]

+ high_resolution = image

+ # if not kwargs.get("no_random_crop", None):

+ # high_resolution = tf.image.random_crop(

+ # image, [size[0], size[1], image.shape[-1]])

+

+ low_resolution = tf.image.resize(

+ high_resolution,

+ [size[0] // factor, size[1] // factor],

+ method=method)

+ low_resolution = tf.clip_by_value(low_resolution, 0, 255)

+ high_resolution = tf.clip_by_value(high_resolution, 0, 255)

+ return low_resolution, high_resolution

+

+ scale_fn.size = size_["size"]

+ return scale_fn

+

+

+def augment_image(

+ brightness_delta=0.05,

+ contrast_factor=[0.7, 1.3],

+ saturation=[0.6, 1.6]):

+ """ Helper function used for augmentation of images in the dataset.

+ Args:

+ brightness_delta: maximum value for randomly assigning brightness of the image.

+ contrast_factor: list / tuple of minimum and maximum value of factor to set random contrast.

+ None, if not to be used.

+ saturation: list / tuple of minimum and maximum value of factor to set random saturation.

+ None, if not to be used.

+ Returns:

+ tf.data.Dataset mappable function for image augmentation

+ """

+

+ def augment_fn(data, label, *args, **kwargs):

+ # Augmenting data (~ 80%)

+

+ def augment_steps_fn(data, label):

+ # Randomly rotating image (~50%)

+ def rotate_fn(data, label):

+ times = tf.random.uniform(minval=1, maxval=4, dtype=tf.int32, shape=[])

+ return (tf.image.rot90(data, times),

+ tf.image.rot90(label, times))

+

+ data, label = tf.cond(

+ tf.less_equal(tf.random.uniform([]), 0.5),

+ lambda: rotate_fn(data, label),

+ lambda: (data, label))

+

+ # Randomly flipping image (~50%)

+ def flip_fn(data, label):

+ return (tf.image.flip_left_right(data),

+ tf.image.flip_left_right(label))

+

+ data, label = tf.cond(

+ tf.less_equal(tf.random.uniform([]), 0.5),

+ lambda: flip_fn(data, label),

+ lambda: (data, label))

+

+ return data, label

+

+ # Randomly returning unchanged data (~20%)

+ return tf.cond(

+ tf.less_equal(tf.random.uniform([]), 0.2),

+ lambda: (data, label),

+ partial(augment_steps_fn, data, label))

+

+ return augment_fn

+

+

+# def reform_dataset(dataset, types, size, num_elems=None):

+# """ Helper function to convert the output_dtype of the dataset

+# from (tf.float32, tf.uint8) to desired dtype

+# Args:

+# dataset: Source dataset(image-label dataset) to convert.

+# types: tuple / list of target datatype.

+# size: [height, width] threshold of the images.

+# num_elems: Number of Data points to store

+# Returns:

+# tf.data.Dataset with the images of dimension >= Args.size and types = Args.types

+# """

+# _carrier = {"num_elems": num_elems}

+#

+# def generator_fn():

+# for idx, data in enumerate(dataset, 1):

+# if _carrier["num_elems"]:

+# if not idx % _carrier["num_elems"]:

+# raise StopIteration

+# if data[0].shape[0] >= size[0] and data[0].shape[1] >= size[1]:

+# yield data[0], data[1]

+# else:

+# continue

+# return tf.data.Dataset.from_generator(

+# generator_fn, types, (tf.TensorShape([None, None, 3]), tf.TensorShape(None)))

+

+

+# setup scaling parameters dictionary

+mean_std_dct = {}

+mean_std_file = ancillary_path+'mean_std_lo_hi_l2.pkl'

+f = open(mean_std_file, 'rb')

+mean_std_dct_l2 = pickle.load(f)

+f.close()

+

+

+class OpdNpyDataset:

+

+ def __init__(self, filenames, hr_size, lr_size, batch_size=128):

+ self.filenames = filenames

+ self.num_files = len(filenames)

+ self.hr_size = hr_size

+ self.lr_size = lr_size

+

+ def integer_gen(limit):

+ n = 0

+ while n < limit:

+ yield n

+ n += 1

+

+ num_gen = integer_gen(self.num_files)

+ gen = make_tf_callable_generator(num_gen)

+ dataset = tf.data.Dataset.from_generator(gen, output_types=tf.int32)

+ dataset = dataset.batch(batch_size)

+ dataset = dataset.map(self.data_function, num_parallel_calls=8)

+ # These execute w/o an iteration on dataset?

+ # dataset = dataset.map(scale_down(), num_parallel_calls=1)

+ # dataset = dataset.map(augment_image(), num_parallel_calls=1)

+

+ dataset = dataset.cache()

+ dataset = dataset.prefetch(buffer_size=1)

+

+ self.dataset = dataset

+

+ def read_numpy_file_s(self, f_idxs):

+ data_s = []

+ for fi in f_idxs:

+ fname = self.filenames[fi]

+ data = np.load(fname)

+ data = data[0, ]

+ data = scale(data, 'cld_opd_dcomp', mean_std_dct)

+ data = data.astype(np.float32)

+ data_s.append(data)

+ hr_image = np.concatenate(data_s)

+ hr_image = tf.expand_dims(hr_image, axis=3)

+ hr_image = tf.image.crop_to_bounding_box(hr_image, 0, 0, self.hr_size, self.hr_size)

+ low_resolution = tf.image.resize(hr_image, [self.lr_size, self.lr_size], method='bicubic')

+ low_resolution = tf.math.multiply(low_resolution, 255.0)

+ hr_image = tf.math.multiply(hr_image, 255.0)

+ low_resolution = tf.clip_by_value(low_resolution, 0, 255)

+ high_resolution = tf.clip_by_value(hr_image, 0, 255)

+

+ low_resolution, high_resolution = augment_image()(low_resolution, high_resolution)

+

+ return low_resolution, high_resolution

+

+ @tf.function(input_signature=[tf.TensorSpec(None, tf.int32)])

+ def data_function(self, indexes):

+ out = tf.numpy_function(self.read_numpy_file_s, [indexes], [tf.float32, tf.float32])

+ return out

\ No newline at end of file

diff --git a/modules/GSOC/E2_ESRGAN/lib/model.py b/modules/GSOC/E2_ESRGAN/lib/model.py

new file mode 100644

index 0000000000000000000000000000000000000000..4bddd99c636b3bfeff49016718a406f8dbf88b22

--- /dev/null

+++ b/modules/GSOC/E2_ESRGAN/lib/model.py

@@ -0,0 +1,136 @@

+from collections import OrderedDict

+from functools import partial

+import tensorflow as tf

+from GSOC.E2_ESRGAN.lib import utils

+

+""" Keras Models for ESRGAN

+ Classes:

+ RRDBNet: Generator of ESRGAN. (Residual in Residual Network)

+ VGGArch: VGG28 Architecture making the Discriminator ESRGAN

+"""

+

+

+class RRDBNet(tf.keras.Model):

+ """ Residual in Residual Network consisting of:

+ - Convolution Layers

+ - Residual in Residual Block as the trunk of the model

+ - Pixel Shuffler layers (tf.nn.depth_to_space)

+ - Upscaling Convolutional Layers

+

+ Args:

+ out_channel: number of channels of the fake output image.

+ num_features (default: 32): number of filters to use in the convolutional layers.

+ trunk_size (default: 3): number of Residual in Residual Blocks to form the trunk.

+ growth_channel (default: 32): number of filters to use in the internal convolutional layers.

+ use_bias (default: True): boolean to indicate if bias is to be used in the conv layers.

+ """

+

+ def __init__(

+ self,

+ out_channel,

+ num_features=32,

+ trunk_size=11,

+ growth_channel=32,

+ use_bias=True,

+ first_call=True):

+ super(RRDBNet, self).__init__()

+ self.rrdb_block = partial(utils.RRDB, growth_channel, first_call=first_call)

+ conv = partial(

+ tf.keras.layers.Conv2D,

+ kernel_size=[3, 3],

+ strides=[1, 1],

+ padding="same",

+ use_bias=use_bias)

+ conv_transpose = partial(

+ tf.keras.layers.Conv2DTranspose,

+ kernel_size=[3, 3],

+ strides=[2, 2],

+ padding="same",

+ use_bias=use_bias)

+ self.conv_first = conv(filters=num_features)

+ self.rdb_trunk = tf.keras.Sequential(

+ [self.rrdb_block() for _ in range(trunk_size)])

+ self.conv_trunk = conv(filters=num_features)

+ # Upsample

+ self.upsample1 = conv_transpose(num_features)

+ self.upsample2 = conv_transpose(num_features)

+ self.conv_last_1 = conv(num_features)

+ self.conv_last_2 = conv(out_channel)

+ self.lrelu = tf.keras.layers.LeakyReLU(alpha=0.2)

+

+ # @tf.function(

+ # input_signature=[

+ # tf.TensorSpec(shape=[None, None, None, 3],

+ # dtype=tf.float32)])

+ def call(self, inputs):

+ return self.unsigned_call(inputs)

+

+ def unsigned_call(self, input_):

+ feature = self.lrelu(self.conv_first(input_))

+ trunk = self.conv_trunk(self.rdb_trunk(feature))

+ feature = trunk + feature

+ feature = self.lrelu(

+ self.upsample1(feature))

+ feature = self.lrelu(

+ self.upsample2(feature))

+ feature = self.lrelu(self.conv_last_1(feature))

+ out = self.conv_last_2(feature)

+ return out

+

+

+class VGGArch(tf.keras.Model):

+ """ Keras Model for VGG28 Architecture needed to form

+ the discriminator of the architecture.

+ Args:

+ output_shape (default: 1): output_shape of the generator

+ num_features (default: 64): number of features to be used in the convolutional layers

+ a factor of 2**i will be multiplied as per the need

+ use_bias (default: True): Boolean to indicate whether to use biases for convolution layers

+ """

+

+ def __init__(

+ self,

+ batch_size=8,

+ output_shape=1,

+ num_features=64,

+ use_bias=False):

+

+ super(VGGArch, self).__init__()

+ conv = partial(

+ tf.keras.layers.Conv2D,

+ kernel_size=[3, 3], use_bias=use_bias, padding="same")

+ batch_norm = partial(tf.keras.layers.BatchNormalization)

+ def no_batch_norm(x): return x

+ self._lrelu = tf.keras.layers.LeakyReLU(alpha=0.2)

+ self._dense_1 = tf.keras.layers.Dense(100)

+ self._dense_2 = tf.keras.layers.Dense(output_shape)

+ self._conv_layers = OrderedDict()

+ self._batch_norm = OrderedDict()

+ self._conv_layers["conv_0_0"] = conv(filters=num_features, strides=1)

+ self._conv_layers["conv_0_1"] = conv(filters=num_features, strides=2)

+ self._batch_norm["bn_0_1"] = batch_norm()

+ for i in range(1, 4):

+ for j in range(1, 3):

+ self._conv_layers["conv_%d_%d" % (i, j)] = conv(

+ filters=num_features * (2**i), strides=j)

+ self._batch_norm["bn_%d_%d" % (i, j)] = batch_norm()

+

+ def call(self, inputs):

+ return self.unsigned_call(inputs)

+ def unsigned_call(self, input_):

+

+ features = self._lrelu(self._conv_layers["conv_0_0"](input_))

+ features = self._lrelu(

+ self._batch_norm["bn_0_1"](

+ self._conv_layers["conv_0_1"](features)))

+ # VGG Trunk

+ for i in range(1, 4):

+ for j in range(1, 3):

+ features = self._lrelu(

+ self._batch_norm["bn_%d_%d" % (i, j)](

+ self._conv_layers["conv_%d_%d" % (i, j)](features)))

+

+ flattened = tf.keras.layers.Flatten()(features)

+ dense = self._lrelu(self._dense_1(flattened))

+ out = self._dense_2(dense)

+ return out

diff --git a/modules/GSOC/E2_ESRGAN/lib/settings.py b/modules/GSOC/E2_ESRGAN/lib/settings.py

new file mode 100644

index 0000000000000000000000000000000000000000..42ccf98612dc3e6a94c53b89dbdbb7dabb1a851a

--- /dev/null

+++ b/modules/GSOC/E2_ESRGAN/lib/settings.py

@@ -0,0 +1,55 @@

+import os

+import yaml

+

+

+def singleton(cls):

+ instances = {}

+

+ def getinstance(*args, **kwargs):

+ if cls not in instances:

+ instances[cls] = cls(*args, **kwargs)

+ return instances[cls]

+ return getinstance

+

+

+@singleton

+class Settings(object):

+ def __init__(self, filename="config.yaml"):

+ self.__path = os.path.abspath(filename)

+

+ @property

+ def path(self):

+ return os.path.dirname(self.__path)

+

+ def __getitem__(self, index):

+ with open(self.__path, "r") as file_:

+ return yaml.load(file_.read(), Loader=yaml.FullLoader)[index]

+

+ def get(self, index, default=None):

+ with open(self.__path, "r") as file_:

+ return yaml.load(

+ file_.read(),

+ Loader=yaml.FullLoader).get(

+ index,

+ default)

+

+

+class Stats(object):

+ def __init__(self, filename="stats.yaml"):

+ if os.path.exists(filename):

+ with open(filename, "r") as file_:

+ self.__data = yaml.load(file_.read(), Loader=yaml.FullLoader)

+ else:

+ self.__data = {}

+ self.file = filename

+

+ def get(self, index, default=None):

+ self.__data.get(index, default)

+

+ def __getitem__(self, index):

+ return self.__data[index]

+

+ def __setitem__(self, index, data):

+ self.__data[index] = data

+ with open(self.file, "w") as file_:

+ yaml.dump(self.__data, file_, default_flow_style=False)

diff --git a/modules/GSOC/E2_ESRGAN/lib/train.py b/modules/GSOC/E2_ESRGAN/lib/train.py

new file mode 100644

index 0000000000000000000000000000000000000000..60710ceb72e5828182018e3cfc3097e6bca5b1ad

--- /dev/null

+++ b/modules/GSOC/E2_ESRGAN/lib/train.py

@@ -0,0 +1,314 @@

+import os

+import time

+from functools import partial

+# from absl import logging

+import logging

+import tensorflow as tf

+# from lib import utils, dataset

+from GSOC.E2_ESRGAN.lib import utils, dataset

+import glob

+

+logging.basicConfig(filename='/Users/tomrink/esrgan_log.txt', level=logging.DEBUG)

+

+

+class Trainer(object):

+ """ Trainer class for ESRGAN """

+

+ def __init__(

+ self,

+ summary_writer,

+ summary_writer_2,

+ settings,

+ model_dir='/Users/tomrink/tf_model_esgan/',

+ data_dir=None,

+ manual=False,

+ strategy=None):

+ """ Setup the values and variables for Training.

+ Args:

+ summary_writer: tf.summary.SummaryWriter object to write summaries for Tensorboard.

+ settings: settings object for fetching data from config files.

+ data_dir (default: None): path where the data downloaded should be stored / accessed.

+ manual (default: False): boolean to represent if data_dir is a manual dir.

+ """

+ self.settings = settings

+ self.model_dir = model_dir

+ self.summary_writer = summary_writer

+ self.summary_writer_2 = summary_writer_2

+ self.strategy = strategy

+ dataset_args = self.settings["dataset"]

+ self.batch_size = self.settings["batch_size"]

+ hr_size = dataset_args['hr_dimension']

+ lr_size = int(hr_size // 4)

+

+ filenames = glob.glob('/Users/tomrink/data/opd_singles/valid_ires_??1?.npy')

+ self.dataset = dataset.OpdNpyDataset(filenames, hr_size, lr_size, batch_size=self.batch_size).dataset

+ print('OPD dataset initialized...')

+

+ def warmup_generator(self, generator):

+ """ Training on L1 Loss to warmup the Generator.

+

+ Minimizing the L1 Loss will reduce the Peak Signal to Noise Ratio (PSNR)

+ of the generated image from the generator.

+ This trained generator is then used to bootstrap the training of the

+ GAN, creating better image inputs instead of random noises.

+ Args:

+ generator: Model Object for the Generator

+ """

+ # Loading up phase parameters

+ print('begin warmup_generator...')

+ warmup_num_iter = self.settings.get("warmup_num_iter", None)

+ phase_args = self.settings["train_psnr"]

+ decay_params = phase_args["adam"]["decay"]

+ decay_step = decay_params["step"]

+ decay_factor = decay_params["factor"]

+ total_steps = phase_args["num_steps"]

+ metric = tf.keras.metrics.Mean()

+ psnr_metric = tf.keras.metrics.Mean()

+

+ # Generator Optimizer

+ G_optimizer = tf.optimizers.Adam(

+ learning_rate=phase_args["adam"]["initial_lr"],

+ beta_1=phase_args["adam"]["beta_1"],

+ beta_2=phase_args["adam"]["beta_2"])

+

+ checkpoint = tf.train.Checkpoint(

+ G=generator,

+ G_optimizer=G_optimizer)

+

+ status = utils.load_checkpoint(checkpoint, "phase_1", self.model_dir)

+ logging.debug("phase_1 status object: {}".format(status))

+ previous_loss = 0

+ start_time = time.time()

+ # Training starts

+

+ def _step_fn(image_lr, image_hr):

+ logging.debug("Starting Distributed Step")

+ with tf.GradientTape() as tape:

+ fake = generator.unsigned_call(image_lr)

+ loss = utils.pixel_loss(image_hr, fake) * (1.0 / self.batch_size)

+ psnr_metric(tf.reduce_mean(tf.image.psnr(fake, image_hr, max_val=256.0)))

+ # gen_vars = list(set(generator.trainable_variables))

+ gen_vars = generator.trainable_variables

+ gradient = tape.gradient(loss, gen_vars)

+ G_optimizer.apply_gradients(zip(gradient, gen_vars))

+ mean_loss = metric(loss)

+ logging.debug("Ending Distributed Step")

+ return tf.cast(G_optimizer.iterations, tf.float32)

+

+ @tf.function

+ def train_step(image_lr, image_hr):

+ # distributed_metric = self.strategy.experimental_run_v2(_step_fn, args=[image_lr, image_hr])

+ distributed_metric = _step_fn(image_lr, image_hr)

+ # mean_metric = self.strategy.reduce(tf.distribute.ReduceOp.MEAN, distributed_metric, axis=None)

+ # return mean_metric

+ return distributed_metric

+

+ for image_lr, image_hr in self.dataset:

+ print(image_lr.shape, image_hr.shape)

+ num_steps = train_step(image_lr, image_hr)

+

+ if num_steps >= total_steps:

+ return

+ if status:

+ status.assert_consumed()

+ logging.info(

+ "consumed checkpoint for phase_1 successfully")

+ status = None

+

+ if not num_steps % decay_step: # Decay Learning Rate

+ logging.debug(

+ "Learning Rate: %s" %

+ G_optimizer.learning_rate.numpy)

+ G_optimizer.learning_rate.assign(

+ G_optimizer.learning_rate * decay_factor)

+ logging.debug(

+ "Decayed Learning Rate by %f."

+ "Current Learning Rate %s" % (

+ decay_factor, G_optimizer.learning_rate))

+ with self.summary_writer.as_default():

+ tf.summary.scalar(

+ "warmup_loss", metric.result(), step=G_optimizer.iterations)

+ tf.summary.scalar("mean_psnr", psnr_metric.result(), G_optimizer.iterations)

+

+ # if not num_steps % self.settings["print_step"]: # test

+ if True:

+ logging.info(

+ "[WARMUP] Step: {}\tGenerator Loss: {}"

+ "\tPSNR: {}\tTime Taken: {} sec".format(

+ num_steps,

+ metric.result(),

+ psnr_metric.result(),

+ time.time() -

+ start_time))

+ if psnr_metric.result() > previous_loss:

+ utils.save_checkpoint(checkpoint, "phase_1", self.model_dir)

+ previous_loss = psnr_metric.result()

+ start_time = time.time()

+

+ def train_gan(self, generator, discriminator):

+ """ Implements Training routine for ESRGAN

+ Args:

+ generator: Model object for the Generator

+ discriminator: Model object for the Discriminator

+ """

+ print('begin train_gan...')

+ phase_args = self.settings["train_combined"]

+ decay_args = phase_args["adam"]["decay"]

+ decay_factor = decay_args["factor"]

+ decay_steps = decay_args["step"]

+ lambda_ = phase_args["lambda"]

+ hr_dimension = self.settings["dataset"]["hr_dimension"]

+ eta = phase_args["eta"]

+ total_steps = phase_args["num_steps"]

+ optimizer = partial(

+ tf.optimizers.Adam,

+ learning_rate=phase_args["adam"]["initial_lr"],

+ beta_1=phase_args["adam"]["beta_1"],

+ beta_2=phase_args["adam"]["beta_2"])

+

+ G_optimizer = optimizer()

+ D_optimizer = optimizer()

+

+ ra_gen = utils.RelativisticAverageLoss(discriminator, type_="G")

+ ra_disc = utils.RelativisticAverageLoss(discriminator, type_="D")

+

+ # The weights of generator trained during Phase #1

+ # is used to initialize or "hot start" the generator

+ # for phase #2 of training

+ status = None

+ checkpoint = tf.train.Checkpoint(

+ G=generator,

+ G_optimizer=G_optimizer,

+ D=discriminator,

+ D_optimizer=D_optimizer)

+ if not tf.io.gfile.exists(

+ os.path.join(

+ self.model_dir,

+ self.settings["checkpoint_path"]["phase_2"],

+ "checkpoint")):

+ hot_start = tf.train.Checkpoint(

+ G=generator,

+ G_optimizer=G_optimizer)

+ status = utils.load_checkpoint(hot_start, "phase_1", self.model_dir)

+ # consuming variable from checkpoint

+ G_optimizer.learning_rate.assign(phase_args["adam"]["initial_lr"])

+ else:

+ status = utils.load_checkpoint(checkpoint, "phase_2", self.model_dir)

+

+ logging.debug("phase status object: {}".format(status))

+

+ gen_metric = tf.keras.metrics.Mean()

+ disc_metric = tf.keras.metrics.Mean()

+ psnr_metric = tf.keras.metrics.Mean()

+

+ # May need to create a Perceptual Model for Optical Depth?

+ # logging.debug("Loading Perceptual Model")

+ # perceptual_loss = utils.PerceptualLoss(

+ # weights="imagenet",

+ # input_shape=[hr_dimension, hr_dimension, 1],

+ # loss_type=phase_args["perceptual_loss_type"])

+ # logging.debug("Loaded Model")

+

+ def _step_fn(image_lr, image_hr):

+ logging.debug("Starting Distributed Step")

+ with tf.GradientTape() as gen_tape, tf.GradientTape() as disc_tape:

+ fake = generator.unsigned_call(image_lr)

+ logging.debug("Fetched Generator Fake")

+ fake = utils.preprocess_input(fake)

+ image_lr = utils.preprocess_input(image_lr)

+ image_hr = utils.preprocess_input(image_hr)

+ # percep_loss = tf.reduce_mean(perceptual_loss(image_hr, fake))

+ # logging.debug("Calculated Perceptual Loss")

+ l1_loss = utils.pixel_loss(image_hr, fake)

+ logging.debug("Calculated Pixel Loss")

+ loss_RaG = ra_gen(image_hr, fake)

+ logging.debug("Calculated Relativistic"

+ "Averate (RA) Loss for Generator")

+ disc_loss = ra_disc(image_hr, fake)

+ logging.debug("Calculated RA Loss Discriminator")

+ # gen_loss = percep_loss + lambda_ * loss_RaG + eta * l1_loss

+ gen_loss = lambda_ * loss_RaG + eta * l1_loss

+ logging.debug("Calculated Generator Loss")

+ disc_metric(disc_loss)

+ gen_metric(gen_loss)

+ gen_loss = gen_loss * (1.0 / self.batch_size)

+ disc_loss = disc_loss * (1.0 / self.batch_size)

+ psnr_metric(tf.reduce_mean(tf.image.psnr(fake, image_hr, max_val=256.0)))

+

+ disc_grad = disc_tape.gradient(disc_loss, discriminator.trainable_variables)

+ logging.debug("Calculated gradient for Discriminator")

+

+ D_optimizer.apply_gradients(zip(disc_grad, discriminator.trainable_variables))

+ logging.debug("Applied gradients to Discriminator")

+

+ gen_grad = gen_tape.gradient(gen_loss, generator.trainable_variables)

+ logging.debug("Calculated gradient for Generator")

+

+ G_optimizer.apply_gradients(zip(gen_grad, generator.trainable_variables))

+ logging.debug("Applied gradients to Generator")

+

+ return tf.cast(D_optimizer.iterations, tf.float32)

+

+ @tf.function

+ def train_step(image_lr, image_hr):

+ # distributed_iterations = self.strategy.experimental_run_v2(step_fn, args=(image_lr, image_hr))

+ distributed_iterations = _step_fn(image_lr, image_hr)

+ # num_steps = self.strategy.reduce(tf.distribute.ReduceOp.MEAN, distributed_iterations, axis=None)

+ # return num_steps

+ return distributed_iterations

+ start = time.time()

+ last_psnr = 0

+

+ for image_lr, image_hr in self.dataset:

+ print(image_lr.shape, image_hr.shape)

+ num_step = train_step(image_lr, image_hr)

+ if num_step >= total_steps:

+ return

+ if status:

+ status.assert_consumed()

+ logging.info("consumed checkpoint successfully!")

+ status = None

+

+ # Decaying Learning Rate

+ for _step in decay_steps.copy():

+ if num_step >= _step:

+ decay_steps.pop(0)

+ g_current_lr = self.strategy.reduce(

+ tf.distribute.ReduceOp.MEAN,

+ G_optimizer.learning_rate, axis=None)

+

+ d_current_lr = self.strategy.reduce(

+ tf.distribute.ReduceOp.MEAN,

+ D_optimizer.learning_rate, axis=None)

+

+ logging.debug(

+ "Current LR: G = %s, D = %s" %

+ (g_current_lr, d_current_lr))

+ logging.debug(

+ "[Phase 2] Decayed Learing Rate by %f." % decay_factor)

+ G_optimizer.learning_rate.assign(G_optimizer.learning_rate * decay_factor)

+ D_optimizer.learning_rate.assign(D_optimizer.learning_rate * decay_factor)

+

+ # Writing Summary

+ with self.summary_writer_2.as_default():

+ tf.summary.scalar(

+ "gen_loss", gen_metric.result(), step=D_optimizer.iterations)

+ tf.summary.scalar(

+ "disc_loss", disc_metric.result(), step=D_optimizer.iterations)

+ tf.summary.scalar("mean_psnr", psnr_metric.result(), step=D_optimizer.iterations)

+

+ # Logging and Checkpointing

+ # if not num_step % self.settings["print_step"]: # testing

+ if True:

+ logging.info(

+ "Step: {}\tGen Loss: {}\tDisc Loss: {}"

+ "\tPSNR: {}\tTime Taken: {} sec".format(

+ num_step,

+ gen_metric.result(),

+ disc_metric.result(),

+ psnr_metric.result(),

+ time.time() - start))

+ # if psnr_metric.result() > last_psnr:

+ last_psnr = psnr_metric.result()

+ utils.save_checkpoint(checkpoint, "phase_2", self.model_dir)

+ start = time.time()

diff --git a/modules/GSOC/E2_ESRGAN/lib/utils.py b/modules/GSOC/E2_ESRGAN/lib/utils.py

new file mode 100644

index 0000000000000000000000000000000000000000..e2c497b297e54a9af0df48aa4221d5fa102cb719

--- /dev/null

+++ b/modules/GSOC/E2_ESRGAN/lib/utils.py

@@ -0,0 +1,302 @@

+import os

+from functools import partial

+import tensorflow as tf

+# from absl import logging

+import logging

+from GSOC.E2_ESRGAN.lib import settings

+

+""" Utility functions needed for training ESRGAN model. """

+

+# Checkpoint Utilities

+

+

+def save_checkpoint(checkpoint, training_phase, basepath=""):

+ """ Saves checkpoint.

+ Args:

+ checkpoint: tf.train.Checkpoint object

+ training_phase: The training phase of the model to load/store the checkpoint for.

+ can be one of the two "phase_1" or "phase_2"

+ basepath: Base path to load checkpoints from.

+ """

+ dir_ = settings.Settings()["checkpoint_path"][training_phase]

+ if basepath:

+ dir_ = os.path.join(basepath, dir_)

+ dir_ = os.path.join(dir_, os.path.basename(dir_))

+ checkpoint.save(file_prefix=dir_)

+ logging.debug("Prefix: %s. checkpoint saved successfully!" % dir_)

+

+

+def load_checkpoint(checkpoint, training_phase, basepath=""):

+ """ Saves checkpoint.

+ Args:

+ checkpoint: tf.train.Checkpoint object

+ training_phase: The training phase of the model to load/store the checkpoint for.

+ can be one of the two "phase_1" or "phase_2"

+ basepath: Base Path to load checkpoints from.

+ """

+ logging.info("Loading check point for: %s" % training_phase)

+ dir_ = settings.Settings()["checkpoint_path"][training_phase]

+ if basepath:

+ dir_ = os.path.join(basepath, dir_)

+ if tf.io.gfile.exists(os.path.join(dir_, "checkpoint")):

+ logging.info("Found checkpoint at: %s" % dir_)

+ status = checkpoint.restore(tf.train.latest_checkpoint(dir_))

+ return status

+

+# Network Interpolation utility

+

+

+def interpolate_generator(

+ generator_fn,

+ discriminator,

+ alpha,

+ dimension,

+ factor=4,

+ basepath=""):

+ """ Interpolates between the weights of the PSNR model and GAN model

+

+ Refer to Section 3.4 of https://arxiv.org/pdf/1809.00219.pdf (Xintao et. al.)

+

+ Args:

+ generator_fn: function which returns the keras model the generator used.

+ discriminiator: Keras model of the discriminator.

+ alpha: interpolation parameter between both the weights of both the models.

+ dimension: dimension of the high resolution image

+ factor: scale factor of the model

+ basepath: Base directory to load checkpoints from.

+ Returns:

+ Keras model of a generator with weights interpolated between the PSNR and GAN model.

+ """

+ assert 0 <= alpha <= 1

+ size = dimension

+ if not tf.nest.is_nested(dimension):

+ size = [dimension, dimension]

+ logging.debug("Interpolating generator. Alpha: %f" % alpha)

+ optimizer = partial(tf.keras.optimizers.Adam)

+ gan_generator = generator_fn()

+ psnr_generator = generator_fn()

+ # building generators

+ gan_generator(tf.random.normal(

+ [1, size[0] // factor, size[1] // factor, 1]))

+ psnr_generator(tf.random.normal(

+ [1, size[0] // factor, size[1] // factor, 1]))

+

+ phase_1_ckpt = tf.train.Checkpoint(

+ G=psnr_generator, G_optimizer=optimizer())

+ phase_2_ckpt = tf.train.Checkpoint(

+ G=gan_generator,

+ G_optimizer=optimizer(),

+ D=discriminator,

+ D_optimizer=optimizer())

+

+

+ load_checkpoint(phase_1_ckpt, "phase_1", basepath)

+ load_checkpoint(phase_2_ckpt, "phase_2", basepath)

+

+ # Consuming Checkpoint

+ logging.debug("Consuming Variables: Adervsarial generator")

+ gan_generator.unsigned_call(tf.random.normal(

+ [1, size[0] // factor, size[1] // factor, 1]))

+ input_layer = tf.keras.Input(shape=[None, None, 1], name="input_0")

+ output = gan_generator(input_layer)

+ gan_generator = tf.keras.Model(

+ inputs=[input_layer],

+ outputs=[output])

+

+ logging.debug("Consuming Variables: PSNR generator")

+ psnr_generator.unsigned_call(tf.random.normal(

+ [1, size[0] // factor, size[1] // factor, 1]))

+

+ for variables_1, variables_2 in zip(

+ gan_generator.trainable_variables, psnr_generator.trainable_variables):

+ variables_1.assign((1 - alpha) * variables_2 + alpha * variables_1)

+

+ return gan_generator

+

+# Losses

+

+

+def preprocess_input(image):

+ image = image[..., ::-1]

+ # mean = -tf.constant([103.939, 116.779, 123.68])

+ mean = -tf.constant([0.0])

+ return tf.nn.bias_add(image, mean)

+

+

+def PerceptualLoss(weights=None, input_shape=None, loss_type="L1"):

+ """ Perceptual Loss using VGG19

+ Args:

+ weights: Weights to be loaded.

+ input_shape: Shape of input image.

+ loss_type: Loss type for features. (L1 / L2)

+ """

+ vgg_model = tf.keras.applications.vgg19.VGG19(

+ input_shape=input_shape, weights=weights, include_top=False)

+ for layer in vgg_model.layers:

+ layer.trainable = False

+ # Removing Activation Function

+ vgg_model.get_layer("block5_conv4").activation = lambda x: x

+ phi = tf.keras.Model(

+ inputs=[vgg_model.input],

+ outputs=[

+ vgg_model.get_layer("block5_conv4").output])

+

+ def loss(y_true, y_pred):

+ if loss_type.lower() == "l1":

+ return tf.compat.v1.losses.absolute_difference(phi(y_true), phi(y_pred))

+

+ if loss_type.lower() == "l2":

+ return tf.reduce_mean(

+ tf.reduce_mean(

+ (phi(y_true) - phi(y_pred))**2,

+ axis=0))

+ raise ValueError(

+ "Loss Function: \"%s\" not defined for Perceptual Loss" %

+ loss_type)

+ return loss

+

+

+def pixel_loss(y_true, y_pred):

+ y_true = tf.cast(y_true, tf.float32)

+ y_pred = tf.cast(y_pred, tf.float32)

+ return tf.reduce_mean(tf.reduce_mean(tf.abs(y_true - y_pred), axis=0))

+

+

+def RelativisticAverageLoss(non_transformed_disc, type_="G"):

+ """ Relativistic Average Loss based on RaGAN

+ Args:

+ non_transformed_disc: non activated discriminator Model

+ type_: type of loss to Ra loss to produce.

+ 'G': Relativistic average loss for generator

+ 'D': Relativistic average loss for discriminator

+ """

+ loss = None

+

+ def D_Ra(x, y):

+ return non_transformed_disc(

+ x) - tf.reduce_mean(non_transformed_disc(y))

+

+ def loss_D(y_true, y_pred):

+ """

+ Relativistic Average Loss for Discriminator

+ Args:

+ y_true: Real Image

+ y_pred: Generated Image

+ """

+ real_logits = D_Ra(y_true, y_pred)

+ fake_logits = D_Ra(y_pred, y_true)

+ real_loss = tf.reduce_mean(tf.nn.sigmoid_cross_entropy_with_logits(

+ labels=tf.ones_like(real_logits), logits=real_logits))

+ fake_loss = tf.reduce_mean(tf.nn.sigmoid_cross_entropy_with_logits(

+ labels=tf.zeros_like(fake_logits), logits=fake_logits))

+ return real_loss + fake_loss

+

+ def loss_G(y_true, y_pred):

+ """

+ Relativistic Average Loss for Generator

+ Args:

+ y_true: Real Image

+ y_pred: Generated Image

+ """

+ real_logits = D_Ra(y_true, y_pred)

+ fake_logits = D_Ra(y_pred, y_true)

+ real_loss = tf.nn.sigmoid_cross_entropy_with_logits(

+ labels=tf.zeros_like(real_logits), logits=real_logits)

+ fake_loss = tf.nn.sigmoid_cross_entropy_with_logits(

+ labels=tf.ones_like(fake_logits), logits=fake_logits)

+ return real_loss + fake_loss

+ if type_ == "G":

+ loss = loss_G

+ elif type_ == "D":

+ loss = loss_D

+ return loss

+

+

+# Strategy Utils

+

+def assign_to_worker(use_tpu):

+ if use_tpu:

+ return "/job:worker"

+ return ""

+

+

+class SingleDeviceStrategy(object):

+ """ Dummy Strategy when Outside TPU """

+

+ def __enter__(self, *args, **kwargs):

+ pass

+

+ def __exit__(self, *args, **kwargs):

+ pass

+

+ def experimental_distribute_dataset(self, dataset, *args, **kwargs):

+ return dataset

+

+ def experimental_run_v2(self, fn, args, kwargs):

+ return fn(*args, **kwargs)

+

+ def reduce(reduction_type, distributed_data, axis):

+ return distributed_data

+

+ def scope(self):

+ return self

+

+

+# Model Utils

+

+

+class RDB(tf.keras.layers.Layer):

+ """ Residual Dense Block Layer """

+

+ def __init__(self, out_features=32, bias=True, first_call=True):

+ super(RDB, self).__init__()

+ _create_conv2d = partial(

+ tf.keras.layers.Conv2D,

+ out_features,

+ kernel_size=[3, 3],

+ kernel_initializer="he_normal",

+ bias_initializer="zeros",

+ strides=[1, 1], padding="same", use_bias=bias)

+ self._conv2d_layers = {

+ "conv_1": _create_conv2d(),

+ "conv_2": _create_conv2d(),

+ "conv_3": _create_conv2d(),

+ "conv_4": _create_conv2d(),

+ "conv_5": _create_conv2d()}

+ self._lrelu = tf.keras.layers.LeakyReLU(alpha=0.2)

+ self._beta = settings.Settings()["RDB"].get("residual_scale_beta", 0.2)

+ self._first_call = first_call

+

+ def call(self, input_):

+ x1 = self._lrelu(self._conv2d_layers["conv_1"](input_))

+ x2 = self._lrelu(self._conv2d_layers["conv_2"](

+ tf.concat([input_, x1], -1)))

+ x3 = self._lrelu(self._conv2d_layers["conv_3"](

+ tf.concat([input_, x1, x2], -1)))

+ x4 = self._lrelu(self._conv2d_layers["conv_4"](

+ tf.concat([input_, x1, x2, x3], -1)))

+ x5 = self._conv2d_layers["conv_5"](tf.concat([input_, x1, x2, x3, x4], -1))

+ if self._first_call:

+ logging.debug("Initializing with MSRA")

+ for _, layer in self._conv2d_layers.items():

+ for variable in layer.trainable_variables:

+ variable.assign(0.1 * variable)

+ self._first_call = False

+ return input_ + self._beta * x5

+

+

+class RRDB(tf.keras.layers.Layer):

+ """ Residual in Residual Block Layer """

+

+ def __init__(self, out_features=32, first_call=True):

+ super(RRDB, self).__init__()

+ self.RDB1 = RDB(out_features, first_call=first_call)

+ self.RDB2 = RDB(out_features, first_call=first_call)

+ self.RDB3 = RDB(out_features, first_call=first_call)

+ self.beta = settings.Settings()["RDB"].get("residual_scale_beta", 0.2)

+

+ def call(self, input_):

+ out = self.RDB1(input_)

+ out = self.RDB2(out)

+ out = self.RDB3(out)

+ return input_ + self.beta * out

diff --git a/modules/GSOC/E2_ESRGAN/main.py b/modules/GSOC/E2_ESRGAN/main.py

new file mode 100644

index 0000000000000000000000000000000000000000..d49e4fbdf7546860fb83fe923c44212a6d415c13

--- /dev/null

+++ b/modules/GSOC/E2_ESRGAN/main.py

@@ -0,0 +1,169 @@

+import os

+from functools import partial

+import argparse

+# from absl import logging

+import logging

+from GSOC.E2_ESRGAN.lib import settings, train, model, utils

+# from tensorflow.python.eager import profiler

+# import tensorflow.compat.v2 as tf

+# tf.enable_v2_behavior()

+import tensorflow as tf

+tf.compat.v1.disable_v2_behavior

+

+logging.basicConfig(filename='/Users/tomrink/esrgan_log.txt', level=logging.DEBUG)

+

+

+""" Enhanced Super Resolution GAN.

+ Citation:

+ @article{DBLP:journals/corr/abs-1809-00219,

+ author = {Xintao Wang and

+ Ke Yu and

+ Shixiang Wu and

+ Jinjin Gu and

+ Yihao Liu and

+ Chao Dong and

+ Chen Change Loy and

+ Yu Qiao and

+ Xiaoou Tang},